Olmo2 Template

Get up and running with large language models. A great collection of flexible & creative landing page templates to promote your software, app, SaaS, startup or business projects.

OLMo is a series of Open Language Models designed to enable the science of language models. It is designed by scientists, for scientists. These models are trained on the Dolma dataset. OLMo 2 is a new family of 7B and 13B models trained on up to 5T tokens. It builds upon the foundation set by its predecessors, offering fully open language models with 7 billion and 13 billion parameters. Unlike many industry peers, OLMo 2 ensures complete transparency, releasing training data, code, recipes, and even intermediate checkpoints.

We are releasing all code, checkpoints, logs (coming soon), and associated training details. Check out the OLMo 2 paper or Tülu 3 paper for more details on OLMo 2's architecture, training methodology, and performance benchmarks.

First, install PyTorch following the instructions specific to your operating system. You can also install from PyPI with: By running this model in a Jupyter notebook, you can avoid using the terminal, simplifying the process and reducing setup time. You can also run OLMo 2 locally using Gradio and LangChain.

Official training scripts for various model sizes can be found in src/scripts/train/. To see the exact usage for each script, run the script without any arguments. Throughput numbers from these scripts under various configuration settings have been measured on a cluster with NVIDIA H100 GPUs.

The OLMo2 model is the successor of the OLMo model, which was proposed in OLMo: Accelerating the Science of Language Models. The architectural changes from the original OLMo model to this model are: RMSNorm is used instead of standard layer norm, and a norm is applied to attention queries and keys. The configuration class (Olmo2Config in Hugging Face Transformers) is used to instantiate an OLMo2 model according to the specified arguments, defining the model architecture.
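The two architectural changes noted above, RMSNorm in place of standard layer norm and a norm applied to attention queries and keys, can be illustrated with a small dependency-free Python sketch. It assumes the usual RMSNorm formulation (divide by the root mean square, optional per-channel gain, no mean subtraction or bias) and assumes the query/key norm is also an RMSNorm applied before the scaled dot product. The function names and eps values are illustrative only and are not taken from the OLMo codebase, where these norms operate on batched tensors with learned weights.

```python
import math


def layer_norm(x, eps=1e-5):
    """Standard layer norm without the learned affine part: center, then scale to unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def rms_norm(x, weight=None, eps=1e-6):
    """RMSNorm: divide by the root mean square only (no centering, no bias), then apply a gain."""
    if weight is None:
        weight = [1.0] * len(x)  # stands in for the learned per-channel gain
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for w, v in zip(weight, x)]


def attention_logit(q, k, qk_norm=True):
    """Scaled dot-product logit for one query/key pair.

    With qk_norm=True, q and k are RMS-normalized first (a sketch of the
    "norm applied to attention queries and keys" change).
    """
    if qk_norm:
        q, k = rms_norm(q), rms_norm(k)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))


if __name__ == "__main__":
    q, k = [1.0, 2.0, 3.0], [0.5, -1.0, 2.0]
    big_q, big_k = [100 * v for v in q], [100 * v for v in k]
    # Without QK-norm, scaling q and k by 100 scales the logit by 10,000.
    print(attention_logit(q, k, qk_norm=False), attention_logit(big_q, big_k, qk_norm=False))
    # With QK-norm, the logit is essentially unchanged by the scaling.
    print(attention_logit(q, k), attention_logit(big_q, big_k))
```

Running the script prints two pairs of logits: without the query/key norm, scaling q and k by 100 multiplies the logit by 10,000, while with it the logit stays essentially the same. Keeping attention logits bounded in this way is commonly motivated as a training-stability measure; RMSNorm, for its part, simply drops the mean-centering step of standard layer norm.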
Olmo software saas joomla 4 template Artofit

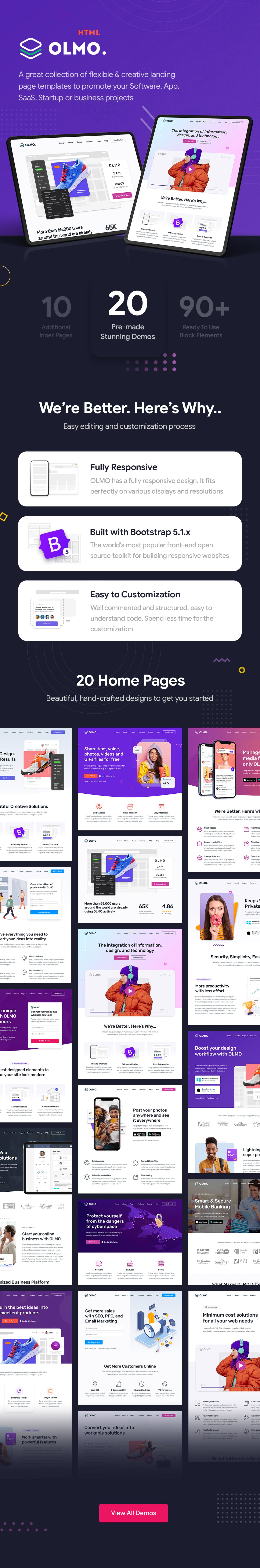

OLMO: a great collection of flexible & creative landing page templates

OLMO Software & SaaS HTML5 Template ThemeMag

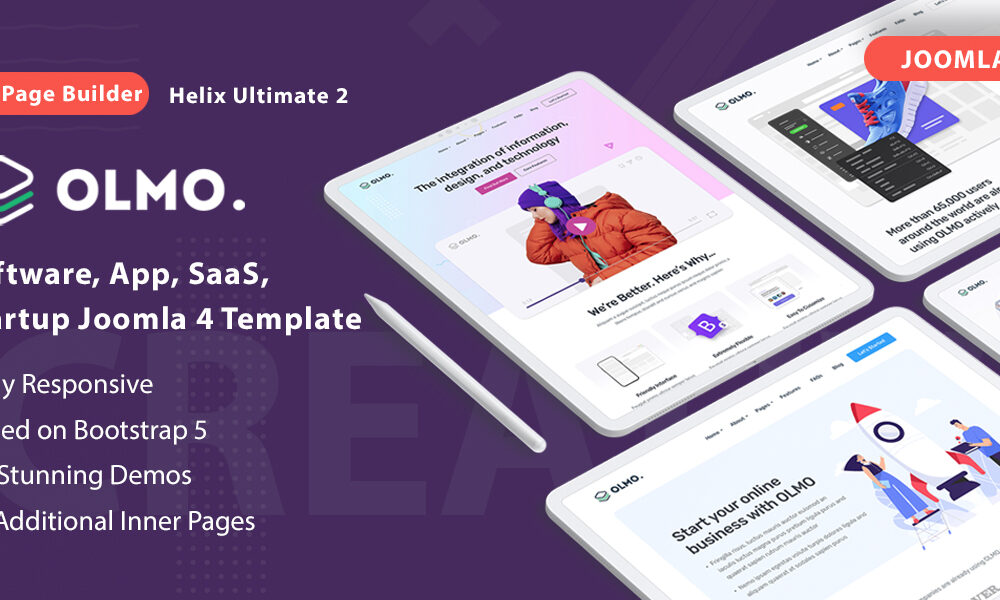

Joomla Template OLMO Software & SaaS Joomla 4 Template

The OLMo template after SFT is inconsistent with the OLMo meta template and needs to be modified for later evaluation · Issue 3860 · hiyouga

OLMO Software and SaaS HTML5 Template freelancers business project

Macron 'Olmo' Template FIFA Kit Creator Showcase

Olmo 2 Leafless PNG, Botanical Drawings, Set, Origin PNG Image

OLMO Software & SaaS HTML5 Template

OLMO Software & SaaS HTML5 Template App design layout, SaaS, HTML5
