Mistral 7B Prompt Template

In this guide, we provide an overview of the Mistral 7B LLM and how to prompt with it. It also includes tips, applications, limitations, papers, and additional reading materials related to Mistral 7B. Learn the essentials of Mistral prompt syntax with clear examples and concise explanations, and explore Mistral LLM prompt templates for efficient and effective language model interactions. Technical insights and best practices are included. To evaluate the ability of the model to avoid ...

In this post, we will describe the process to get this model up and running. Let's implement the code for inference using the Mistral 7B model in Google Colab. We'll utilize the free version with a single T4 GPU and load the model from Hugging Face.
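
The Colab inference step described above could look roughly like the sketch below. It assumes the `transformers`, `torch`, and `accelerate` packages are installed and that the checkpoint is `mistralai/Mistral-7B-Instruct-v0.1`; the text does not name a checkpoint, so that ID, the helper name `generate_reply`, and the half-precision load are illustrative assumptions rather than a prescribed setup.

```python
# Assumed checkpoint; the text only says "Mistral 7B from Hugging Face".
MODEL_ID = "mistralai/Mistral-7B-Instruct-v0.1"


def generate_reply(user_prompt, model_id=MODEL_ID, max_new_tokens=128):
    """Load the model and return its reply to a single user message.

    Hypothetical helper: heavy imports are done lazily so that defining
    the function does not require a GPU or a model download.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # Half precision keeps the weights near the memory limit of a T4;
    # device_map="auto" needs the accelerate package.
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,
        device_map="auto",
    )

    # Let the tokenizer's registered chat template build the prompt string.
    messages = [{"role": "user", "content": user_prompt}]
    prompt = tokenizer.apply_chat_template(messages, tokenize=False)

    # The template already adds the BOS token, so skip special tokens here.
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)
    inputs = inputs.to(model.device)

    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )

    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Explain prompt templates in one sentence.")` in a GPU runtime would download the weights on first use. If the half-precision weights do not fit in memory, a quantized load is a common alternative.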

Prompt engineering for 7B LLMs: below are detailed examples showcasing various prompting. Perfect for developers and tech enthusiasts. Models from the Ollama library can be customized with a prompt.
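
As a rough illustration of customizing an Ollama model with a prompt, the sketch below renders the text of a Modelfile using the `FROM`, `PARAMETER`, and `SYSTEM` instructions. The helper name `render_modelfile`, the base model tag `mistral`, and the example system prompt are assumptions chosen for illustration.

```python
def render_modelfile(base_model, system_prompt, temperature=0.7):
    """Return Modelfile text that customizes a base model with a system prompt.

    Hypothetical helper: it only builds the text and does not call Ollama.
    """
    if '"""' in system_prompt:
        # The system prompt is wrapped in triple quotes below.
        raise ValueError("system prompt must not contain triple quotes")
    lines = [
        f"FROM {base_model}",
        f"PARAMETER temperature {temperature}",
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"


modelfile_text = render_modelfile(
    "mistral", "You are a concise assistant.", temperature=0.2
)
print(modelfile_text)
```

Saving the printed text as `Modelfile` and running `ollama create my-mistral -f Modelfile` followed by `ollama run my-mistral` would build and start the customized model; `my-mistral` is a placeholder name.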

Projects for using a private LLM (Llama 2): Jupyter notebooks on loading and indexing data, creating prompt templates, CSV agents, and using retrieval QA chains to query the custom data.

LiteLLM supports Hugging Face chat templates, and will automatically check if your Hugging Face model has a registered chat template. It's recommended to leverage `tokenizer.apply_chat_template` in order to prepare the tokens appropriately for the model. You can check the prompt template for any model in Python, starting from `from transformers import AutoTokenizer` and a `tokenizer = ...` assignment.
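
To make the chat template idea concrete, here is a minimal sketch that builds an instruct-style prompt by hand in the `<s>[INST] ... [/INST] answer</s>` layout published for Mistral 7B Instruct. The function names are hypothetical, and the exact whitespace is an assumption that differs between template versions, so the tokenizer's own template should be treated as authoritative.

```python
def format_mistral_instruct(messages, bos="<s>", eos="</s>"):
    """Render alternating user/assistant messages as one prompt string.

    Illustrative only: spacing around the tags is an assumption.
    """
    parts = [bos]
    for index, message in enumerate(messages):
        expected_role = "user" if index % 2 == 0 else "assistant"
        if message["role"] != expected_role:
            raise ValueError("roles must alternate user/assistant, starting with user")
        if message["role"] == "user":
            parts.append(f"[INST] {message['content']} [/INST]")
        else:
            # Each assistant turn is closed with the end-of-sequence token.
            parts.append(f" {message['content']}{eos}")
    return "".join(parts)


def registered_chat_template(model_id):
    """Return the template string stored with a model's tokenizer.

    Needs the transformers package and network access; the model ID is
    supplied by the caller.
    """
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return tokenizer.chat_template


conversation = [
    {"role": "user", "content": "Hello"},
    {"role": "assistant", "content": "Hi there"},
    {"role": "user", "content": "Tell me a joke"},
]
print(format_mistral_instruct(conversation))
```

Comparing the hand-built string with `tokenizer.apply_chat_template(conversation, tokenize=False)` is a quick way to see how a given checkpoint's template handles spacing.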

Today, we'll delve into these tokenizers, demystify any sources of debate, and explore how they work, the proper chat templates to use for each one, and their story within the community! Then we will cover some important details for properly prompting the model for best results.

An Introduction to Mistral 7B - Future Skills Academy

System prompt handling in chat templates for Mistral-7B-Instruct

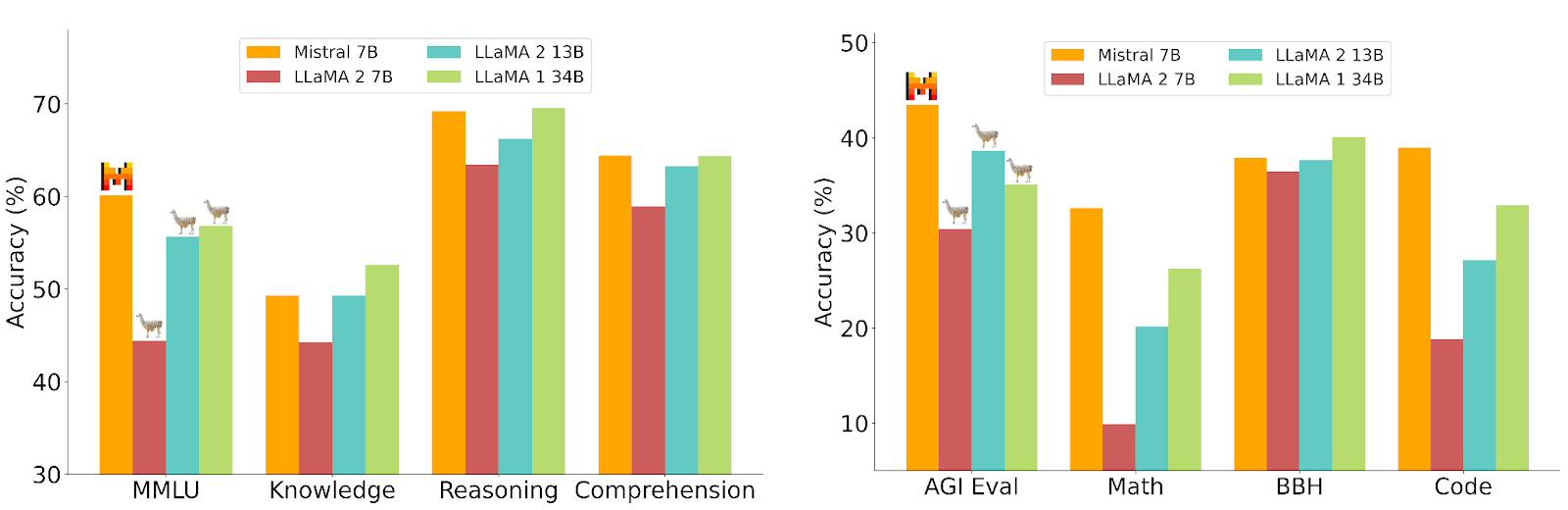

Mistral 7B Best Open Source LLM So Far

Mistral 7B better than Llama 2? Getting started, Prompt template

rreit/mistral7BInstructprompt at main

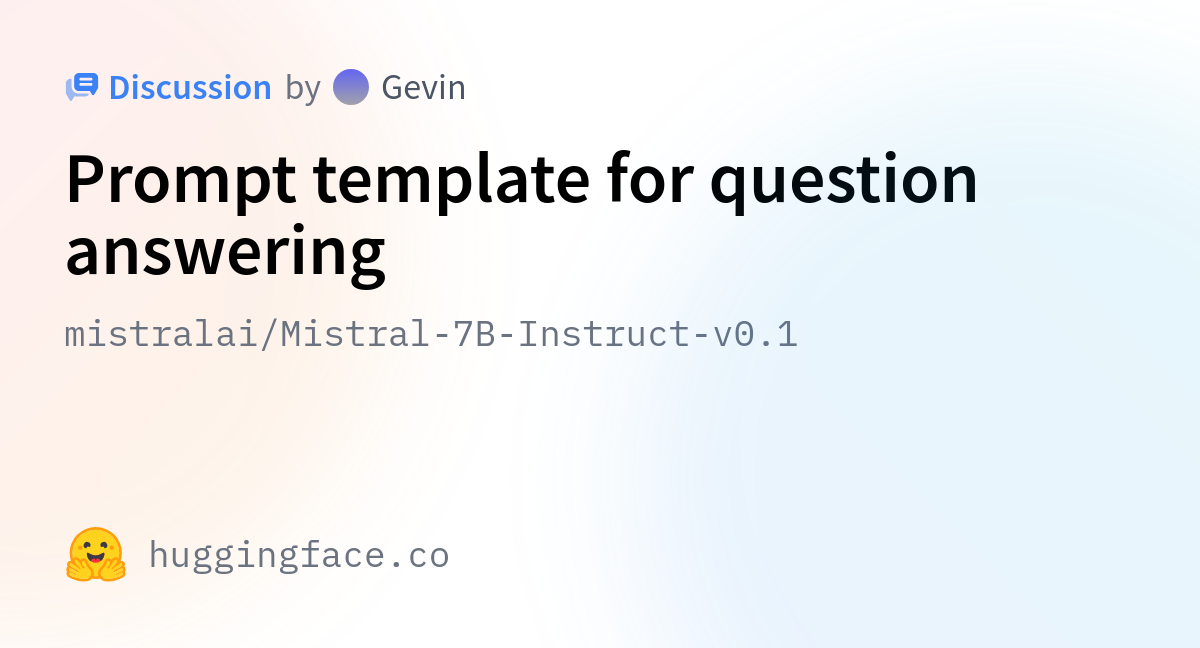

mistralai/Mistral-7B-Instruct-v0.1 · Prompt template for question answering

Mistral 7B LLM Prompt Engineering Guide

Getting Started with Mistral-7B-Instruct-v0.1

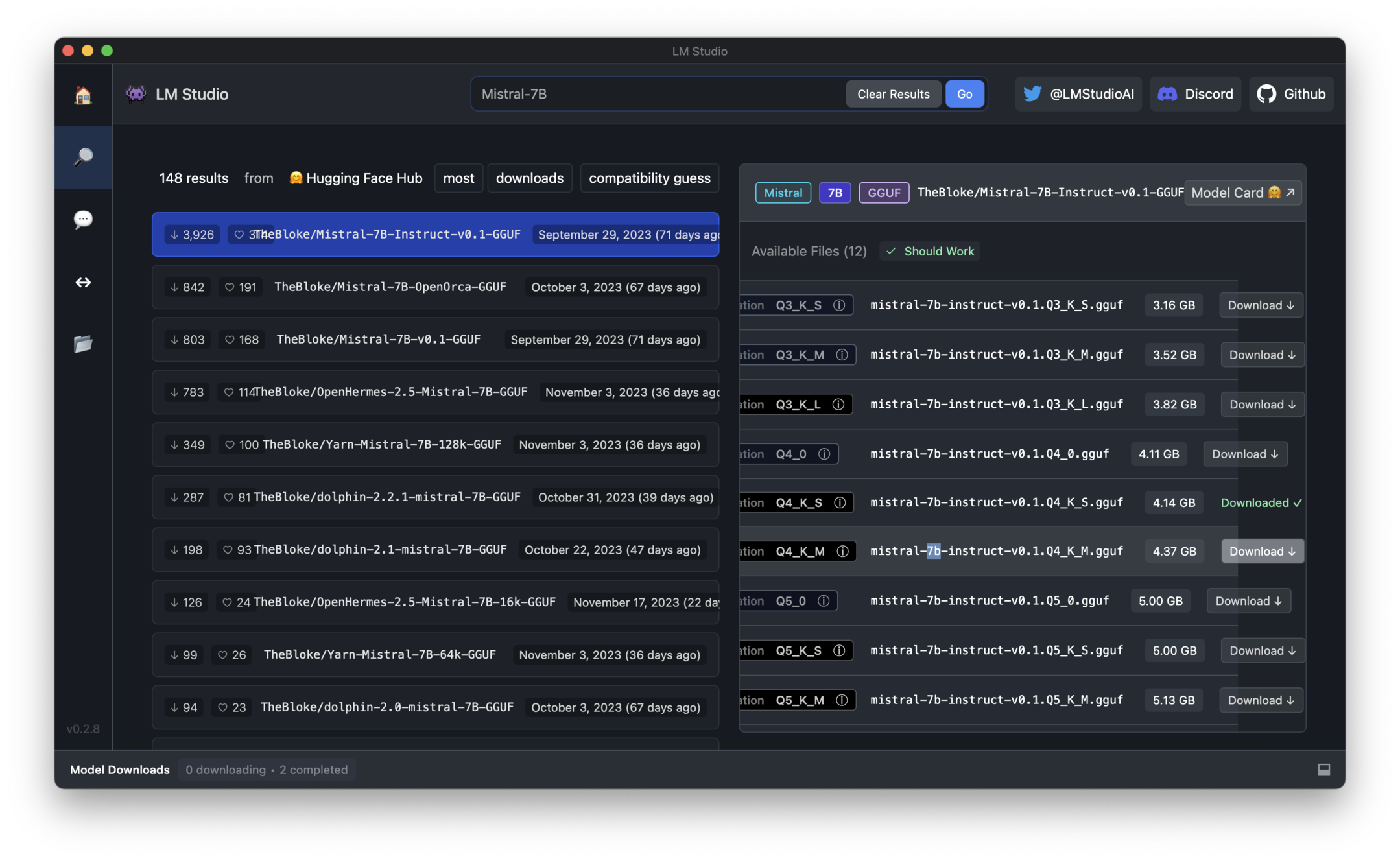

Mistral 7B Instruct Model library

mistralai/Mistral-7B-Instruct-v0.2 · system prompt template
